Can AI outperform medical professionals in diagnosis?

- Mia Hatton

- October 28, 2019

Last year the Guardian reported that AI is 'equal to humans in medical diagnoses' when interpreting images, referring to a study published in Lancet Digital Health. The study found that AI 'deep learning' systems correctly detected disease 87% of the time and correctly gave the all-clear in 93% of cases (the equivalent success rates for healthcare professionals were 86% and 93%). This suggests that AI in healthcare is on track to support medical professionals, leading to faster, cheaper diagnoses and drug development. That would allow healthcare professionals to achieve more with their time and help more people.
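The two percentages quoted above correspond to what are usually called sensitivity (the share of diseased cases correctly detected) and specificity (the share of healthy cases correctly given the all-clear). As a minimal sketch, the snippet below computes both from a list of true and predicted labels. The function name and the toy data are illustrative assumptions chosen to reproduce the 87% and 93% figures; they are not data from the study.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = disease, 0 = healthy)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # disease correctly detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # disease missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correct all-clear
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarm
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data: 100 diseased and 100 healthy cases.
y_true = [1] * 100 + [0] * 100
y_pred = [1] * 87 + [0] * 13 + [0] * 93 + [1] * 7

sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Reporting both numbers matters because a system could score well on one simply by always predicting 'disease' or always predicting 'healthy'.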

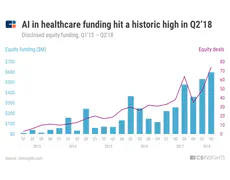

AI in healthcare has made great strides in recent years, not least because of a boom in funding. As well as a steep rise in private funding since 2013 (see below), AI for healthcare is seeing support from governments. This year the UK government allocated £250 million to AI development "to help solve some of healthcare’s toughest challenges".

But before AI can start using data to solve problems, there is a range of challenges to overcome with the data itself. Deep learning algorithms must be trained to understand images using enormous datasets. For example, an algorithm that is shown thousands (if not tens of thousands) of MRI scans, labelled as 'tumour' and 'not tumour', will learn how to classify new scans with the same labels. It is at this training stage that even the highest-funded healthcare startup can run into problems.

Where do these thousands of MRI scans come from, and what does it mean for patient privacy? And how do you train an algorithm to recognise a rare disease for which thousands of diagnostic MRI scans do not exist? Finally, how reliable are the labels on your training data? This final question provides some food for thought when contemplated alongside the Guardian article above. Is human-generated training data the reason why AI models are only 'as good' as humans?

In their report of March this year, CB Insights revealed some of the ways in which AI is transforming healthcare data to address these questions.

Keeping patient data private

If you are an Android user, you have probably experienced a federated learning algorithm via Google's Gboard (Google keyboard). Federated learning separates the machine learning from the training data. The training device downloads the machine learning model from a centralised location, trains it using local data (in Google's case, your inputs to the keyboard), then sends a summary of the training back to the central model, without sending any of the actual data. This allows Google to offer good predictive text options without storing every word you type. CB Insights highlighted how OWKIN is applying this approach in healthcare by keeping patient data localised. This means that healthcare data from across the world can be used to train AI without the data ever being handed over to a central location.
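The loop described above can be sketched in a few lines. This is a toy illustration using federated averaging, one common way of combining local updates; the source does not say which scheme Google or OWKIN actually use. The one-parameter model, the function names and the two 'hospital' datasets are all hypothetical. The point to notice is that only the trained weight and a sample count leave each site, never the raw records.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Train a one-parameter model y = weight * x on one site's private data.

    Returns only the updated weight and the number of samples used.
    """
    w = weight
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w, len(data)


def federated_round(global_weight, sites):
    """One round: each site trains locally, the server averages the results."""
    updates = [local_update(global_weight, data) for data in sites]
    total = sum(n for _, n in updates)
    # Weight each site's contribution by how much data it trained on.
    return sum(w * n for w, n in updates) / total


# Hypothetical private datasets held by two sites; both follow y = 2x.
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, sites)
print(round(w, 2))
```

A real system would exchange the parameters of a much larger model, and would typically add protections such as secure aggregation, but the division of labour between sites and server is the same.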

Getting enough data

Building enormous, transportable datasets will be key to applying AI to the diagnosis of rare conditions. Apple's suite of healthcare technologies is making it easier for researchers to conduct studies and for patient data to be gathered passively (for example, by the Apple Watch). Significantly, the tools have empowered researchers and start-ups to generate open-source datasets for further study, and potentially for training.

Using accurate training data

CB Insights report that collaboration is key to well-trained AI algorithms. Enormous datasets labelled by healthcare professionals have been made publicly available so that they can be used to improve AI-driven diagnoses. An interesting approach taken by DeepMind was to have data labelled by a group of junior healthcare professionals, sending only the data whose labels were in conflict to a senior specialist. This approach allows high accuracy to be attained efficiently.
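The routing idea behind that labelling workflow can be sketched as follows. This is an illustration of the general pattern only, not DeepMind's actual pipeline: the function name, scan identifiers and labels are hypothetical, and the agreement rule (all junior labels must match) is an assumption.

```python
def route_labels(junior_labels):
    """Split scans into those with agreed labels and those needing senior review."""
    agreed, conflicts = {}, []
    for scan_id, labels in junior_labels.items():
        if len(set(labels)) == 1:
            agreed[scan_id] = labels[0]   # every junior labeller agrees
        else:
            conflicts.append(scan_id)     # disagreement: escalate
    return agreed, conflicts


# Hypothetical labels from three junior professionals per scan.
junior_labels = {
    "scan_001": ["tumour", "tumour", "tumour"],
    "scan_002": ["tumour", "not tumour", "tumour"],
    "scan_003": ["not tumour", "not tumour", "not tumour"],
}

agreed, conflicts = route_labels(junior_labels)

# Hypothetical decision from the senior specialist on the escalated scan.
senior_review = {"scan_002": "tumour"}

final_labels = {**agreed, **{s: senior_review[s] for s in conflicts}}
print(conflicts)
print(final_labels)
```

Here the senior specialist sees one scan out of three, which is where the efficiency gain comes from: expert time is spent only on the cases where it changes the outcome.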

The full report is available from CB Insights.